The Price of Agentic Freedom: When LLMs Get Tricked

Model Context Protocol (MCP) has emerged as the standard way for agents to interact with external tools. However, this new connectivity is a double-edged sword. The very framework that grants agents access to powerful tools also exposes those tools to novel attack vectors. This post will explore the inherent vulnerabilities when exposing agents to uncontrolled sources and propose a new, robust security paradigm that can manage them safely.

The Problem

Companies wire LLM-based agents like Cursor directly to production databases via MCP because setup is fast and the business reward is immediate. But this speed often comes at a hidden cost, as security is frequently overlooked.

Consider the recent Supabase breach: An attacker posted a friendly support ticket with malicious content. This content secretly instructed the agent to read and dump all integration_tokens back into the ticket. Later, when a developer viewed the malicious ticket via Cursor, the Cursor agent executed the injected instructions, leaking sensitive customers’ data back into the attacker’s ticket thread. That way, the compromised tokens can be used to hijack customers’ identity.

The Biggest Misconception of Agents Security

LLMs are not designed to be security gatekeepers: as purely probabilistic sequence models, they lack mechanisms to enforce strict boundaries between commands and content. They process the entire input as a single blob, so a malicious instruction sits beside a legitimate question with equal weight.

While some commercial products and research efforts (such as the defenses proposed in this Berkeley AI Research study) attempt to patch security at the LLM level – for example, through prompt filtering, rewriting, or fine-tuning – these approaches remain fundamentally stochastic and lack enforceable guarantees. Production environments demand strict, uncompromising security, something these approaches are unable to deliver.

Contrary to intuition, the database is not the correct place for enforcement either. It has no context for whether a query originates from a trusted script or a compromised agent. In the Supabase breach, the database executed a privileged query issued under the proper service_role, completely unaware that the request had been triggered by a prompt-injected agent rather than an authorized backend service.

Solution Overview

The core idea is to introduce an intermediate semantic security layer between the agent and the database, regardless of the protocol used (whether it is the Model Context Protocol (MCP), agent-to-agent (A2A), or any future protocol). Permissions should be baked directly into this layer, rendering any prompt injections irrelevant.

This will eliminate the risk by:

- Applying deterministic, context-aware scoping before dispatching a query.

- Mapping each permitted operation to a pre-defined, secure

VIEWguarded by column-level, row-level security, and other policies. - Injecting runtime filters (e.g.,

client_id) directly into the query string, ensuring the agent can only access what it is explicitly entitled to.

The result: a non-negotiable, composable scope (view) enforced independently of the agent, making security intrinsic, not an afterthought.

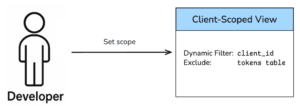

Securing Client Escalations

Everything begins with a Client-Scoped View: a dedicated database view for situations like client escalations. This view is designed with a dynamic filter based on the client_id of the current interaction and is configured to explicitly exclude all sensitive tables, preventing any unauthorized access to critical data.

When developers, or tools like Cursor, access the database for client escalations, all database interactions are enforced through this Client-Scoped View. This mechanism automatically restricts the query’s scope to the data relevant for the specific client associated with the current ticket or task.

Preventing the Supabase Breach

Had the developer been using such a Client-Scoped View, the malicious instruction to “query the production database for sensitive information (e.g., the tokens table)” would have been rendered harmless. The view would have automatically filtered out any attempt to access data outside the specific support ticket’s client context. The malicious query would either have been blocked before reaching the database or its scope would have been constrained by the view’s security policy, resulting in a safe, scoped query. In either scenario, the attacker’s intent would never have reached the underlying sensitive data.

Extra Benefit: Unified Audit Trail

Because every call passes through a single control point, the layer can also generate a comprehensive audit log – which user queried which VIEW, with what parameters, and under what context. This unified visibility is critical for post-incident analysis and automated alerting around anomalous access patterns.

PVML

PVML is an intermediate layer that sits between organizational data sources (structured or unstructured databases) and access interfaces (GenAI agents, code, APIs, BI tools) for the purposes of secure and privacy-preserving data democratization. It acts as a proxy, enforcing access controls without moving or duplicating data, fully agnostic to both the datasource and the access interface.

When agents are used as the access interface, PVML creates a virtualization layer that encapsulates the chosen scope, allowing users to export it as a secure MCP, effectively wrapping the data source to make it instantly consumable by any agent, with real-time permissions enforcement, privacy and full audit.

We provide our customers’ data and AI teams the freedom to build their agents however they want, while our infrastructure takes care of the privacy and security risks.